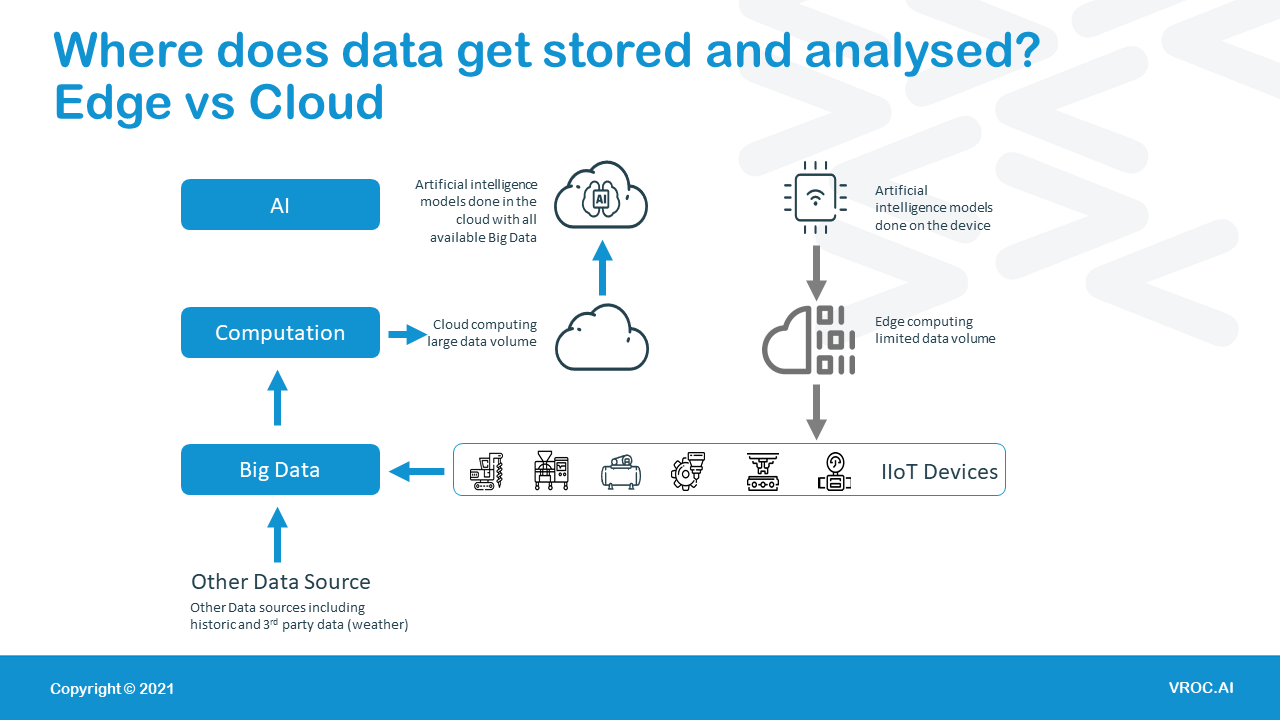

Artificial intelligence on the edge or in the cloud: what is the difference, and what are the benefits and use cases of each approach? Before we jump into the use cases, let’s go over the basic definitions of the technology.

The main difference between cloud and edge is the volume of data that can be processed and the latency involved, and therefore the depth and scope of the analytics that can be conducted.

Let’s explore this statement more.

Edge AI is coming to the forefront in technology we use daily, such as virtual assistants and fitness trackers (most implementations still rely on cloud solutions, but the shift is towards local speech and image processing). Looking to the future, we’ll see it used increasingly in autonomous driving, where advanced image recognition could activate an airbag before a crash or brake when someone suddenly steps out in front of a car. We’ll also see it used for security intelligence and more widely in the medical field.

For industry, Edge AI is showing potential at deep underground mine sites, which have connectivity issues. Condition monitoring and alerts for critical equipment can be handled by edge AI technology, giving operators time-critical insight into imminent failures and problems.

Underground mines are starting to see the benefit of this technology, which helps improve personnel safety by ensuring the reliability of critical machines that must run continuously.

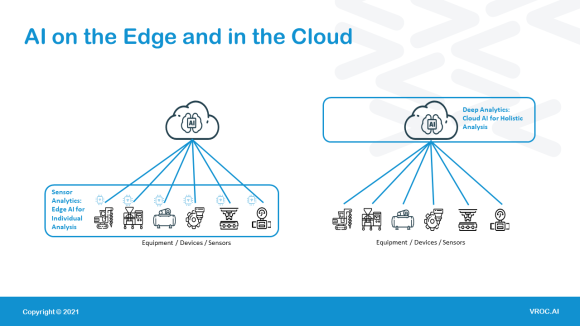

Edge AI applications are designed to learn, monitor, and predict the behaviours of specific equipment or a limited data field. The rapid results come from analysing the data locally and from the reduced volume of that data. Because results are needed quickly (for example, ahead of an imminent accident or threat), there is little time for the analytics to take place. The limited edge computing power also typically restricts the complexity and finality of the AI models in use, possibly reducing their capabilities to mostly statistical analysis. Predictive analysis requires constant retraining and tuning of model parameters, which cannot easily be achieved at the edge.
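As a rough illustration of the kind of lightweight statistical analysis that fits on a constrained edge device, the sketch below flags readings that deviate sharply from a short rolling window. It is a minimal example under stated assumptions, not a description of any particular product: the class name `RollingZScoreDetector`, the window size, the z-score threshold, and the sample readings are all hypothetical.

```python
from collections import deque
from math import sqrt


class RollingZScoreDetector:
    """Flags readings that deviate strongly from a short recent window.

    Hypothetical sketch: memory use is bounded by `window_size`, which
    mirrors the limited storage available on an edge device.
    """

    def __init__(self, window_size=20, z_threshold=3.0, min_samples=5):
        self.window = deque(maxlen=window_size)  # oldest readings drop off
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, value):
        """Return True if `value` is anomalous relative to the window."""
        is_anomaly = False
        if len(self.window) >= self.min_samples:
            mean = sum(self.window) / len(self.window)
            variance = sum((x - mean) ** 2 for x in self.window) / len(self.window)
            std = sqrt(variance)
            # Skip the check when the window is perfectly flat (std == 0).
            if std > 0 and abs(value - mean) / std > self.z_threshold:
                is_anomaly = True
        self.window.append(value)
        return is_anomaly


# Assumed sample data: a stable sensor followed by one sudden spike.
readings = [50.0, 50.2, 49.9, 50.1, 50.0, 49.8, 50.1, 50.3, 49.9, 50.0, 58.0]
detector = RollingZScoreDetector(window_size=10, z_threshold=3.0)
flags = [detector.update(r) for r in readings]
```

Only the final spike is flagged here. The detector needs no retraining and no historical store, which is why this style of analysis suits the edge, but it also cannot learn from past failure modes.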

Cloud AI, or AI that runs on a hosted server, differs in that it can easily analyse vast amounts of data, even billions of data points. AI models can digest a wide range of data sources, taking a holistic view of a process, a plant, or even a single piece of equipment, and can identify outlying contributing factors that a localised Edge AI approach may not take into account. Some of these factors may be external, such as the weather or even unconnected processes at an industrial facility.

Cloud AI can also perform the same tasks as edge AI where high-bandwidth connections are available. Common examples include Google’s image and speech recognition services. As more research is done and hardware-level AI acceleration is added to edge devices, some of the heavy lifting is moving to the edge. For the time being, however, training of anything but the simplest models is still performed in the cloud.

One VROC client was able to quickly deploy an AI model after discovering a high moisture reading, which could have led to a gas leak at their oil and gas platform. The AI model indicated that the root cause was a gas well, a separate process with no apparent relation to the issue. The client followed the recommendation and shut in this particular well, which resulted in the high moisture reading rapidly dropping and a significant safety incident being avoided. The client was able to determine the root cause because live data from multiple separate processes was being analysed simultaneously.

In this example the models ran on live and historical data and were able to give insights into a critical situation in a matter of minutes, helping the client avoid an incident estimated to cost close to one million USD. The benefit of a cloud-based AI platform in this situation was that more data could be analysed using more computing power, and despite the volume of data the analysis was still performed rapidly.
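To make the idea of analysing several separate processes together more concrete, the sketch below ranks candidate signals by how strongly they move with a target reading. This is a deliberately simple stand-in using Pearson correlation, not the method used in the example above; the function names, signal names, and values are all invented for illustration, and a high correlation only suggests where to look, it does not prove a cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation information
    return cov / sqrt(var_x * var_y)


def rank_contributors(target, candidates):
    """Sort candidate signals by absolute correlation with the target."""
    scores = {name: pearson(series, target) for name, series in candidates.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical, made-up readings sampled at the same timestamps.
moisture = [2.0, 2.1, 2.0, 2.4, 2.9, 3.5, 4.2]
candidates = {
    "gas_well_flow": [10, 10, 10, 12, 15, 18, 22],
    "compressor_temp": [80, 81, 79, 80, 81, 80, 79],
    "ambient_temp": [25, 26, 27, 26, 25, 24, 25],
}

ranking = rank_contributors(moisture, candidates)
top_signal, top_score = ranking[0]
```

With this invented data the well flow signal ranks first. The point of the sketch is that such a comparison is only possible when signals from otherwise unrelated processes are available in one place, which is typically the case in a cloud or central deployment rather than on a single edge device.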

Historical data is also a key component of artificial intelligence. It can help improve model accuracy, as the AI can learn from similar historical conditions and failure modes. When historical data is combined with live data, the AI can model and predict future conditions. Historical data is typically not stored at the edge because storage there is limited, which in turn limits the complexity of the analysis that can be performed at the edge.

One other major difference worth noting is the distinction between predictive and condition-based monitoring. Edge AI can detect conditions and thresholds that are nearly met, met, or exceeded, making it more of a condition-based monitoring tool. Whilst it does, for the most part, provide an early notification, that notification may come only minutes or hours in advance. Cloud-based AI, by contrast, detects minute deviations and can start predicting issues often weeks in advance.
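The contrast between the two styles of monitoring can be sketched in a few lines. In the example below, `threshold_state` is a condition-based check against a fixed limit, while `hours_until_limit` fits a straight line to recent hourly readings and extrapolates to the limit. Both functions, the 90% warning fraction, and the temperature values are assumptions made for illustration; a simple linear trend stands in for the far richer models a cloud platform would train on historical data.

```python
def threshold_state(value, limit, warn_fraction=0.9):
    """Condition-based check: classify one reading against a fixed limit."""
    if value > limit:
        return "exceeded"
    if value == limit:
        return "met"
    if value >= warn_fraction * limit:
        return "nearly met"
    return "normal"


def hours_until_limit(history, limit):
    """Predictive check: least-squares line through hourly readings,
    extrapolated to the limit. Returns None if there is no upward trend."""
    n = len(history)
    if n < 2:
        return None
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(history) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, history))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing_hour = (limit - intercept) / slope
    # Hours remaining, measured from the most recent reading.
    return max(0.0, crossing_hour - (n - 1))


# Hypothetical bearing temperature rising 0.5 degrees per hour for a day.
history = [60.0 + 0.5 * hour for hour in range(24)]
limit = 100.0

state_now = threshold_state(history[-1], limit)   # threshold view
lead_time = hours_until_limit(history, limit)     # trend view
```

On this made-up data the threshold check still reports "normal", because the latest reading is well below the limit, while the trend estimate already points to a crossing about 57 hours ahead. That gap is the extra lead time the paragraph above describes.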

The more lead time, the more options operators have to plan interventions. Another VROC client was alerted seven days in advance of a failure in its water pump. This lead time ensured that no downtime was experienced and that maintenance was a planned activity. The prediction resulted in estimated savings of between USD 150,000 and USD 250,000.

An industrial business can benefit from using artificial intelligence both on the edge and in the cloud. Where connectivity is an issue and critical equipment needs to run continuously, the edge is a great way to access AI insights. To go further, with earlier time-to-failure predictions, holistic root cause analysis across processes, and enterprise-wide optimisation insights, artificial intelligence can be run either in the cloud or on a client’s own infrastructure.

VROC’s no-code, rapidly deployed solution, OPUS, is unique in that it can be hosted in our own private data centre, in a client’s on-premises data centre, on a client’s public cloud, or on the VROC Cube.

Interested in a demo? Get in touch with our team.
